The End of Solo AI: Why AI Teams Are Replacing AI Assistants in 2026

How multi-agent orchestration became the new standard for software development

The numbers shouldn’t surprise you anymore, but they still do.

Anthropic’s CEO confirmed that over 90% of Claude’s code is now AI-written. The creator of Claude Code landed 259 PRs in 30 days. One demonstration showed Claude Code matching a year-long Google engineering project in a single hour.

But here’s what most developers are missing: the breakthrough isn’t the AI model. It’s the orchestration layer that makes these results possible.

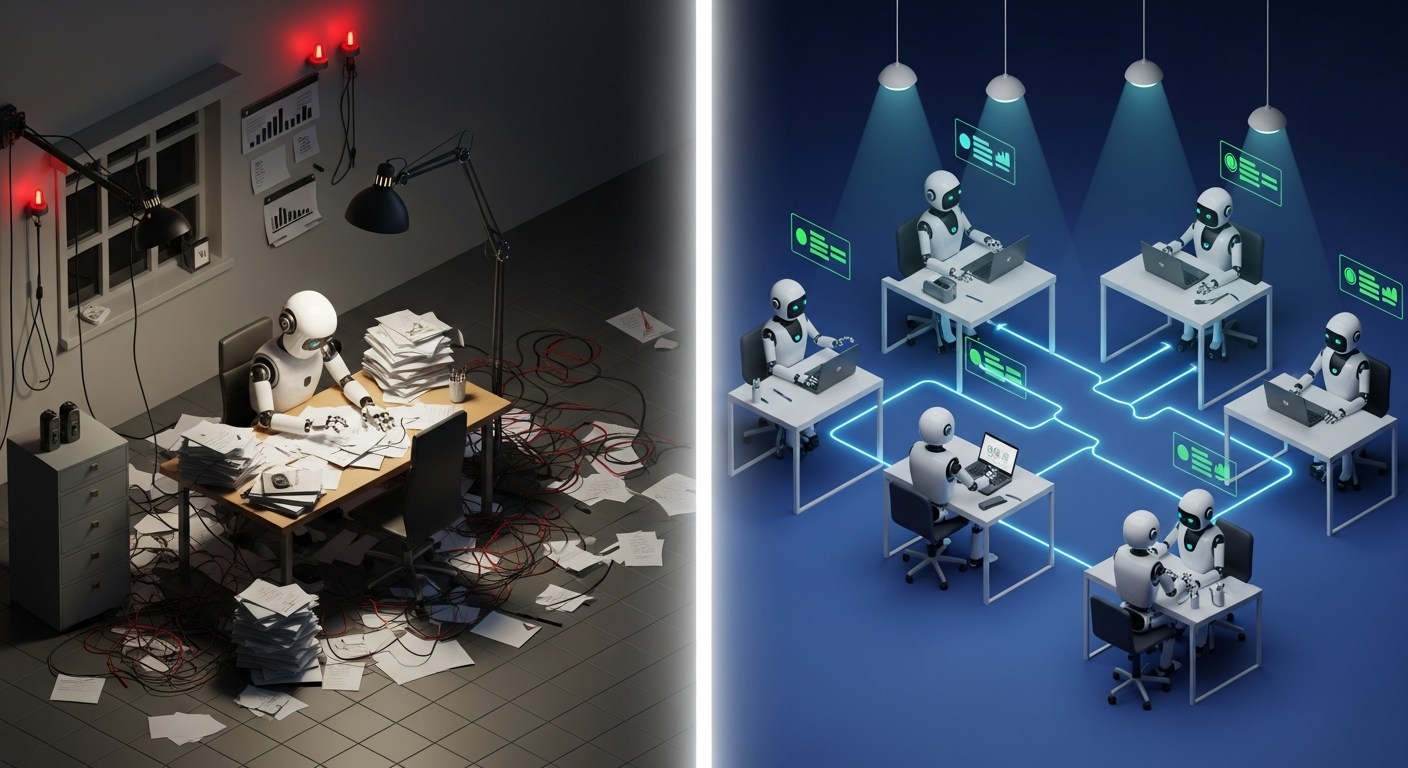

We’ve crossed from “AI assistant” to “AI development team.” And if you’re still using single-agent workflows, you’re already behind.

The Single-Agent Ceiling

Every developer using AI assistants hits the same walls:

Context fills up. Ask Claude to handle a complex refactoring, and you watch the context window fill with noise. By the time you need it to synthesize, the early context has been crowded out or compacted away.

No specialization. Every task starts from scratch. There’s no “testing expert” or “documentation specialist”—just one generalist trying to context-switch between architecture and linting.

Serial processing. While your AI writes tests, it can’t simultaneously refactor. While it documents, it can’t validate. Everything queues behind everything else.

Multi-agent architectures address all three. And the industry has noticed: 57% of organizations already deploy multi-step agent workflows, and 81% plan to expand them in 2026.

This isn’t coming. It’s here.

The Four-Agent Team Pattern

The simplest effective pattern mirrors human team structure:

The Architect owns system exploration, requirements analysis, and design authority. This agent reads the codebase, understands constraints, and creates the master coordination document.

The Builder focuses exclusively on implementation. Given clear specifications from the Architect, it writes features without getting distracted by testing or documentation concerns.

The Validator develops test suites, catches edge cases, and runs quality assurance. Because it works in a separate context, it reads the Builder’s code without inheriting the Builder’s assumptions.

The Scribe handles documentation, code refinement, and guides. By the time it runs, implementation is stable, so documentation reflects reality.

These four agents coordinate through a shared Markdown file: MULTI_AGENT_PLAN.md. No complex framework required—just structured communication about tasks, assignments, and status.
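The article does not prescribe a layout for MULTI_AGENT_PLAN.md. As a minimal sketch, assume a hypothetical Markdown table with Task, Owner, and Status columns (the column names and the `todo`/`in_progress`/`done` values are our assumptions). A few lines of Python are enough for an agent-side helper to find its next assignment:

```python
from __future__ import annotations

import re

# Hypothetical layout: the article only says the plan tracks tasks, assignments, and status.
EXAMPLE_PLAN = """\
# MULTI_AGENT_PLAN

| Task | Owner | Status |
|------|-------|--------|
| Design auth module | Architect | done |
| Implement auth module | Builder | in_progress |
| Write auth tests | Validator | todo |
| Document auth API | Scribe | todo |
"""

# One table row: | task | owner | status |
ROW = re.compile(r"^\|\s*(?P<task>[^|]+?)\s*\|\s*(?P<owner>[^|]+?)\s*\|\s*(?P<status>[^|]+?)\s*\|$")


def parse_plan(text: str) -> list[dict[str, str]]:
    """Return one dict per task row, skipping the header and separator rows."""
    rows = []
    for line in text.splitlines():
        match = ROW.match(line.strip())
        if match is None or match["task"] == "Task" or set(match["task"]) <= {"-"}:
            continue
        rows.append(match.groupdict())
    return rows


def next_task(text: str, owner: str) -> str | None:
    """Return the first 'todo' task assigned to `owner`, or None if nothing is waiting."""
    for row in parse_plan(text):
        if row["owner"] == owner and row["status"] == "todo":
            return row["task"]
    return None
```

Each agent could call `next_task` with its own role name at every sync, then update the Status cell when it starts or finishes the work.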

One team using this pattern finished a project estimated at a week in two days. The pattern works because it mirrors how high-performing human teams operate: clear roles, shared context, regular communication, built-in quality checks.

The Verification Loop: Your 2-3x Multiplier

Boris Cherny, the creator of Claude Code, calls it “probably the most important thing to get great results”:

Give Claude a way to verify its work.

By his estimate, giving Claude that feedback loop improves the quality of the final result 2-3x. Here’s why.

When an agent can run tests, take screenshots, or execute validation scripts, it enters an iteration cycle. Failed test? Analyze, fix, retest. Screenshot doesn’t match design? Adjust, recapture, compare. This cycle continues until quality criteria are met.
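That cycle is a bounded loop. In this minimal sketch, `generate` and `verify` are hypothetical callables standing in for "ask the agent for a revision" and "run the tests, screenshot comparison, or validation script"; the `max_iterations` cap is an added assumption so a stubborn failure cannot loop forever.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    passed: bool
    feedback: str  # e.g. failing test names or a description of a screenshot mismatch


def verification_loop(
    generate: Callable[[str], str],
    verify: Callable[[str], Verdict],
    max_iterations: int = 5,
) -> tuple[str, int]:
    """Regenerate until `verify` passes, feeding each failure into the next attempt.

    Returns the accepted candidate and the number of attempts it took.
    Raises RuntimeError if the quality bar is never met.
    """
    feedback = ""
    for attempt in range(1, max_iterations + 1):
        candidate = generate(feedback)  # analyze the last failure and fix
        verdict = verify(candidate)  # retest, recapture, compare
        if verdict.passed:
            return candidate, attempt
        feedback = verdict.feedback
    raise RuntimeError(f"still failing after {max_iterations} attempts: {feedback}")
```

The important design choice is that `verify` returns feedback, not just pass/fail: the failure details are what let the next attempt improve instead of guessing.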

Without verification, you get “vibe coding”—accepting AI output because it looks reasonable. With verification, you get systematic quality improvement.

Cherny’s team tests every UI change through a Chrome extension before merging. The agent opens a browser, tests the interface, and iterates until both the code works and the experience feels right. This isn’t manual QA automated—it’s AI that genuinely evaluates its own output.

PostToolUse hooks add another layer. A PostToolUse hook runs after each tool call, so it can format (and lint) code automatically every time Claude edits a file. Claude usually produces well-formatted code, but the hook handles the last 10% so formatting errors don’t fail CI later.
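A minimal sketch of such a hook script, assuming Claude Code passes the hook event as JSON on stdin with `tool_name` and `tool_input.file_path` fields (check the hooks reference for your version). The set of edit-tool names and the extension-to-formatter table, including `ruff format` and `prettier --write`, are hypothetical choices; substitute your own tools.

```python
from __future__ import annotations

import json
import subprocess
import sys

# Assumed mapping from file extension to formatter command; adjust for your stack.
FORMATTERS = {
    ".py": ["ruff", "format"],
    ".ts": ["npx", "prettier", "--write"],
    ".js": ["npx", "prettier", "--write"],
}
EDIT_TOOLS = {"Edit", "Write", "MultiEdit"}  # assumed names of the file-editing tools


def formatter_command(payload: dict) -> list[str] | None:
    """Build the formatter command for the file a tool call just touched, if any."""
    if payload.get("tool_name") not in EDIT_TOOLS:
        return None
    path = payload.get("tool_input", {}).get("file_path", "")
    for suffix, command in FORMATTERS.items():
        if path.endswith(suffix):
            return [*command, path]
    return None


def main() -> int:
    """Hook entry point: read the event JSON from stdin and format the edited file."""
    command = formatter_command(json.load(sys.stdin))
    if command is None:
        return 0
    result = subprocess.run(command, capture_output=True, text=True)
    if result.returncode != 0:
        print(result.stderr, file=sys.stderr)  # surface formatter errors
    return 0  # this sketch treats formatting as best-effort and always exits 0
```

To use it as the hook command, end the file with `sys.exit(main())` and register it under `PostToolUse` in your Claude Code settings with a matcher for the edit tools.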

The principle generalizes: any measurable quality signal you can expose to your agents becomes a feedback loop that compounds over sessions.

Context as Architecture

Here’s the insight that separates scaling developers from stuck ones: treat context as a managed resource, not a dumping ground.

Subagents implement this architecturally. Each runs in its own context window with a custom system prompt. High-volume operations—test suite runs, log parsing, documentation fetching—stay isolated in the subagent. Only distilled summaries return to the main conversation.

Consider the difference: run a full test suite in your main context, and thousands of lines of output consume your window. Run it in a subagent, and you get back “3 tests failed with these errors.” That’s the difference between context exhaustion and sustainable operation.
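The "distilled summary" step can be as simple as filtering the log before anything returns to the main conversation. This sketch assumes pytest-style `FAILED path::test - error` summary lines; other runners would need a different filter. `distill_test_output` and its `max_failures` cap are hypothetical names.

```python
from __future__ import annotations


def distill_test_output(raw: str, max_failures: int = 5) -> str:
    """Condense a long test log into the short summary a subagent would hand back.

    Assumes pytest-style summary lines such as
    'FAILED tests/test_api.py::test_login - AssertionError: ...'.
    """
    failures = [
        line.removeprefix("FAILED ").strip()
        for line in raw.splitlines()
        if line.startswith("FAILED ")
    ]
    if not failures:
        return "No failing tests reported."
    noun = "test" if len(failures) == 1 else "tests"
    shown = "\n".join(f"- {failure}" for failure in failures[:max_failures])
    summary = f"{len(failures)} {noun} failed with these errors:\n{shown}"
    if len(failures) > max_failures:
        summary += f"\n- ... and {len(failures) - max_failures} more"
    return summary
```

Thousands of lines of passing-test noise collapse to a handful of lines, which is the whole point of running the suite outside the main context.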

The built-in subagents in Claude Code optimize for this:

- Explore: Fast, read-only codebase search using the Haiku model

- Plan: Architecture research without implementation capability

- Bash: Terminal commands in separate context

- General-purpose: Complex multi-step tasks with full access

Custom subagents extend the pattern. A code reviewer subagent with read-only access. A debugger subagent with edit permissions. A data scientist subagent with SQL execution rights.

Each subagent is a Markdown file with YAML frontmatter defining its name, description, allowed tools, and model. Place them in .claude/agents/, and they’re automatically available. Share them via version control, and your whole team benefits.
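A small helper can generate these files so every subagent on the team follows the same shape. This sketch writes the frontmatter keys `name`, `description`, `tools`, and `model`, which is our reading of Claude Code's subagent format; verify the exact keys and allowed model values against the current docs. `write_subagent` itself is hypothetical.

```python
from __future__ import annotations

import json
from pathlib import Path


def write_subagent(root: Path, name: str, description: str, tools: list[str],
                   model: str, prompt: str) -> Path:
    """Write <root>/.claude/agents/<name>.md: YAML frontmatter, then the system prompt."""
    agents_dir = root / ".claude" / "agents"
    agents_dir.mkdir(parents=True, exist_ok=True)
    frontmatter = "\n".join([
        "---",
        f"name: {name}",
        # JSON-quote the description: a JSON string is also a valid YAML scalar,
        # so a colon or '#' in the text cannot break the frontmatter.
        f"description: {json.dumps(description)}",
        f"tools: {', '.join(tools)}",
        f"model: {model}",
        "---",
    ])
    path = agents_dir / f"{name}.md"
    path.write_text(f"{frontmatter}\n\n{prompt.strip()}\n", encoding="utf-8")
    return path
```

For the read-only code reviewer described above, a call might look like `write_subagent(Path('.'), 'code-reviewer', 'Reviews diffs for bugs and style issues', ['Read', 'Grep', 'Glob'], 'sonnet', 'You are a meticulous code reviewer...')`, and the resulting file is what you commit.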

The Meta-Agent Pattern

The most sophisticated orchestration doesn’t just run multiple agents—it has an agent managing agents.

The meta-agent analyzes requirements and creates dependency graphs. It determines which work can happen simultaneously without conflicts. It distributes tasks, coordinates results, and handles the inevitable merge conflicts when two agents touch adjacent code.

The meta-agent doesn’t write code. It leads the team that writes code.
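The dependency-graph step maps directly onto a topological sort. This sketch uses Python's standard `graphlib.TopologicalSorter` to split tasks into waves: every task in a wave depends only on earlier waves, so a meta-agent could hand a whole wave to parallel workers. The task names are hypothetical, and the sketch does not detect two tasks in the same wave editing the same file.

```python
from __future__ import annotations

from graphlib import TopologicalSorter


def parallel_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves that can run concurrently.

    `dependencies` maps each task to the set of tasks that must finish first.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only so the output is deterministic
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave finished, unlocking its dependents
    return waves
```

For `{"api": {"schema"}, "ui": {"schema"}, "tests": {"api", "ui"}, "docs": {"api"}}` the waves are `[["schema"], ["api", "ui"], ["docs", "tests"]]`: the schema comes first, API and UI work can proceed side by side, and tests and docs follow once their inputs exist.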

One implementation uses Redis queues for task distribution and file locking to prevent simultaneous edits. Worker agents acquire locks, make changes, run validation, and release locks. A Vue.js dashboard tracks everything in real time. The result: 10+ Claude instances working in parallel on the same codebase without stepping on each other.
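The lock-then-work discipline can be shown without Redis. In this single-process sketch, `queue.Queue` stands in for the Redis queue and one `threading.Lock` per file path stands in for the distributed file lock; these substitutions are ours, not the implementation's. Each worker takes every lock its task needs in sorted order, which rules out deadlock between two workers that share files.

```python
from __future__ import annotations

import queue
import threading
from collections import defaultdict
from contextlib import ExitStack

# In-process stand-ins (assumptions): queue.Queue plays the Redis task queue,
# and one threading.Lock per file path plays the distributed file lock.
_file_locks = defaultdict(threading.Lock)
_registry_guard = threading.Lock()  # guards creation of new per-file locks


def lock_for(path: str) -> threading.Lock:
    with _registry_guard:
        return _file_locks[path]


def worker(tasks: queue.Queue, done: list[str], done_guard: threading.Lock) -> None:
    while True:
        task = tasks.get()
        if task is None:  # sentinel: no more work for this worker
            return
        name, files = task
        with ExitStack() as held:
            # Deduplicate (Lock is not reentrant) and sort, so no two workers deadlock.
            for path in sorted(set(files)):
                held.enter_context(lock_for(path))
            # A real worker would edit `files` and run validation here.
            with done_guard:
                done.append(name)
        # Leaving the ExitStack released every file lock this task held.


def run(task_list: list[tuple[str, list[str]]], n_workers: int = 4) -> list[str]:
    """Drain `task_list` with `n_workers` threads; returns task names in completion order."""
    tasks: queue.Queue = queue.Queue()
    for task in task_list:
        tasks.put(task)
    for _ in range(n_workers):
        tasks.put(None)  # one stop signal per worker
    done: list[str] = []
    done_guard = threading.Lock()
    threads = [threading.Thread(target=worker, args=(tasks, done, done_guard))
               for _ in range(n_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return done
```

Swapping the stand-ins for a Redis list and a Redis-backed lock changes the transport, not the protocol: acquire, edit, validate, release.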

This is where enterprise frameworks like claude-flow operate. It coordinates 54+ specialized agents through Byzantine fault-tolerant consensus protocols, with three queen types (Strategic, Tactical, Adaptive) directing eight worker categories. Smart routing sends simple tasks to fast models and complex work to Opus.
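Smart routing reduces to a classification step before dispatch. The thresholds, the `Task` fields, and the `haiku`/`opus` tier labels below are illustrative assumptions, not claude-flow's actual routing rules.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical tier labels; map them to whatever model identifiers your setup uses.
FAST_MODEL = "haiku"
STRONG_MODEL = "opus"


@dataclass
class Task:
    description: str
    files_touched: int
    needs_design: bool = False


def route(task: Task, max_simple_files: int = 2) -> str:
    """Send small, well-scoped edits to the fast tier and everything else to the strong tier."""
    if task.needs_design or task.files_touched > max_simple_files:
        return STRONG_MODEL
    return FAST_MODEL
```

Real routers may weigh more signals, but the cost logic is the same: reserve the expensive model for work where its extra capability changes the outcome.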

You don’t need all that to start. But knowing it exists shows where the ceiling is—much higher than a single chat window.

The CLAUDE.md Accumulator

Most teams treat CLAUDE.md as a reference document. The successful ones treat it as something closer to training data: a running record of corrections that every session loads.

When Claude makes a mistake in your specific environment, you add it to CLAUDE.md. Now Claude knows not to repeat it. When you establish a convention, you document it. Now Claude follows it consistently.

The file accumulates. So does Claude’s effectiveness in your specific context.
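Capturing a correction can be a one-line habit. This hypothetical `record_lesson` helper appends a bullet under a `## Lessons learned` heading (the heading name is our assumption), creating the section if needed and skipping lessons already recorded so the file does not fill with repeats.

```python
from __future__ import annotations

from pathlib import Path


def record_lesson(claude_md: Path, lesson: str, section: str = "## Lessons learned") -> bool:
    """Append `lesson` as a bullet under `section` in CLAUDE.md; return True if the file changed."""
    text = claude_md.read_text(encoding="utf-8") if claude_md.exists() else ""
    bullet = f"- {lesson.strip()}"
    if bullet in text.splitlines():
        return False  # already recorded
    if section not in text.splitlines():
        # Start the section at the end of the file, separated by a blank line.
        text = text.rstrip("\n") + ("\n\n" if text else "") + section + "\n"
    lines = text.splitlines()
    # Insert after the last bullet directly under the heading.
    idx = lines.index(section) + 1
    while idx < len(lines) and lines[idx].startswith("- "):
        idx += 1
    lines.insert(idx, bullet)
    claude_md.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return True
```

Because the file lives in the repository, each lesson can ship in the same PR as the fix that surfaced it.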

Boris Cherny’s team tags @claude on coworkers’ pull requests to capture learnings. Every PR review becomes a potential CLAUDE.md update. Every edge case discovered becomes a permanent correction.

This creates compound advantage. Organizations with robust CLAUDE.md practices don’t just use AI better today—they use it better every week as the knowledge base grows.

The Claude Code team’s CLAUDE.md currently spans 2.5k tokens. That’s not documentation overhead. That’s 2.5k tokens of project-specific intelligence that every Claude session inherits.

The Enterprise Reality

If you’re working at scale, the numbers matter:

- 80% of organizations report measurable economic impact from AI agents today

- 91% of enterprises use AI coding tools in production

- 46% cite integration with existing systems as their primary barrier

- 42% point to data access and quality issues

- 40% identify security and compliance concerns

Notice what’s missing? Capability concerns. The models are good enough. The barrier is connecting them to production systems securely.

The winning strategy is hybrid build-and-buy: 47% combine off-the-shelf agents with custom development. Pure buy (21%) lacks customization. Pure build (20%) lacks speed.

For ROI, one reported figure stands out: $50,000 per month in engineering time replaced by $2,000 in compute costs. It comes from production deployments running 10+ parallel agents, not from projections.
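The arithmetic behind that claim, taking both figures as monthly: net savings are $48,000 per month, compute costs 1/25 of the engineering time it replaces, and the annualized net is $576,000. This sketch leaves out costs the article does not quantify, such as the engineering time spent reviewing and supervising agent output.

```python
from __future__ import annotations


def roi_summary(engineering_cost_replaced: float, compute_cost: float) -> dict[str, float]:
    """Net savings and cost ratio for replacing engineering time with compute (monthly inputs)."""
    net_monthly = engineering_cost_replaced - compute_cost
    return {
        "net_monthly": net_monthly,
        "cost_ratio": engineering_cost_replaced / compute_cost,  # how many times cheaper
        "net_annual": net_monthly * 12,
    }


summary = roi_summary(50_000, 2_000)  # the figures reported in the article
```

Plugging in your own numbers, including review time as part of the cost, is the honest version of this calculation.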

What To Do Monday Morning

If you’re a developer:

Start simple. Run two Claude sessions in separate terminal tabs, each in its own git checkout. Give one the architecture task, the other the implementation. Watch how the separation clarifies both efforts.
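`git worktree` is one way to get those separate checkouts while sharing a single repository; separate clones also work. The branch prefix `agent/` and the sibling-directory naming below are hypothetical conventions.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def worktree_commands(repo: Path, roles: list[str]) -> list[list[str]]:
    """One `git worktree add` per role: a sibling directory checked out on its own new branch."""
    return [
        ["git", "-C", str(repo), "worktree", "add", "-b", f"agent/{role}",
         str(repo.parent / f"{repo.name}-{role}")]
        for role in roles
    ]


def create_worktrees(repo: Path, roles: list[str]) -> None:
    for command in worktree_commands(repo, roles):
        subprocess.run(command, check=True)  # stop at the first git error
```

After `create_worktrees(Path('shop'), ['architect', 'builder'])`, open one terminal tab in each new directory and start a Claude Code session there. Separate branches keep the two sessions from overwriting each other's files.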

Build verification into your next task. Write the tests first, then tell Claude to implement until they pass. See for yourself whether the quality gain matches the 2-3x Cherny describes.

Start a personal CLAUDE.md. Every time Claude does something wrong in your environment, document it. You’re building a compound asset.

If you’re a team lead:

Establish team-wide CLAUDE.md conventions. What style guidelines do you enforce? What patterns have failed before? What tools are required for your stack? Document them where Claude can see them.

Create shared slash commands for your common workflows. A /commit-push-pr command. A /run-tests command. A /code-review command. Store them in .claude/commands/ and commit them to version control.
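Project slash commands are plain Markdown prompt files, with the file name becoming the command name. A minimal scaffolding sketch; the prompt text is illustrative, and `$ARGUMENTS` is, as we understand it, the placeholder Claude Code fills with whatever follows the command.

```python
from __future__ import annotations

from pathlib import Path

# Illustrative prompt bodies; $ARGUMENTS is assumed to receive the text typed after the command.
COMMANDS = {
    "run-tests": "Run the test suite. If anything fails, fix it and rerun until green. $ARGUMENTS",
    "code-review": "Review the current diff for bugs, missing tests, and style violations. $ARGUMENTS",
    "commit-push-pr": "Commit the staged changes with a descriptive message, push the branch, and open a PR.",
}


def scaffold_commands(root: Path, commands: dict[str, str] = COMMANDS) -> list[Path]:
    """Write one Markdown file per command into <root>/.claude/commands/ (file name = command)."""
    target = root / ".claude" / "commands"
    target.mkdir(parents=True, exist_ok=True)
    written = []
    for name, prompt in commands.items():
        path = target / f"{name}.md"
        if not path.exists():  # never clobber a command a teammate already customized
            path.write_text(prompt + "\n", encoding="utf-8")
            written.append(path)
    return written
```

Running it once and committing `.claude/commands/` gives everyone on the team the same `/run-tests`, `/code-review`, and `/commit-push-pr`.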

Try the four-agent pattern on your next feature. Architect, Builder, Validator, Scribe. Shared MULTI_AGENT_PLAN.md. 30-minute sync intervals. Report back on what worked.

If you’re an executive:

Address integration before scaling. The pattern is clear: organizations struggle with connecting agents to existing systems, not with agent capability. Solve the connection problem first.

Track ROI like 80% of organizations already do. Time saved, defects caught, features shipped. You can’t expand what you can’t measure.

Prepare for role transformation. Your developers are becoming AI team leads. The skills that matter are shifting from coding to orchestration. Training and hiring should reflect this.

The Window Is Closing

The data is unambiguous: multi-agent orchestration has crossed from innovation to production practice.

When 57% of organizations already use multi-step workflows and 81% plan to expand them, you’re not evaluating a new trend. You’re deciding how quickly to catch up.

When the creator of Claude Code produces 259 PRs in 30 days, that’s not an aspirational benchmark. That’s your competition if you’re building developer tools.

When Claude Code matches a year-long Google project in one hour, the question isn’t whether AI can do serious engineering work. It’s whether you’re positioned to benefit.

The single-agent assistant model served us well. But its ceiling is now visible, and the teams that have broken through are pulling ahead.

The question isn’t whether to adopt AI orchestration. It’s how fast you can implement before it becomes table stakes.

Research compiled from 17 sources including Anthropic engineering documentation, enterprise surveys, framework repositories, and developer case studies.